AI is Not a Friend

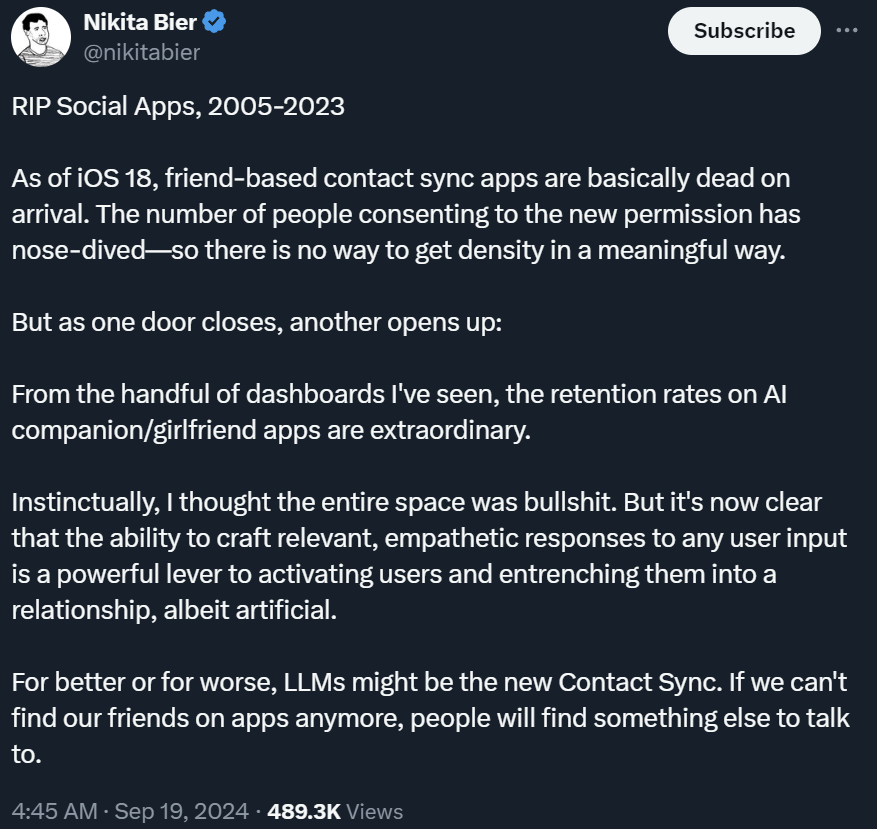

The following tweet might easily be one of the most unintentionally dystopian posts I've seen.

At first, it seems fine. Changes to APIs on iOS can harm the app ecosystem and what's possible for developers. But this part sticks out.

Have you seen it yet?

> a powerful lever to activating users and entrenching them into a relationship, albeit artificial.

"Albeit" is doing a lot of heavy lifting there.

Social apps, of course, need to figure out how to grow and keep users at some point, but isn't the main goal here connecting people? "Activating and entrenching" is not a very social motive for building a social app.

However, if your motivation is to build social products that grow quickly and make a splash, he's 100% right. The incentives are there for founders and growth marketers to exploit the human-like responses LLMs can generate to find success. We saw the Friend necklace receive $2.5 million in funding and a ton of press after its reveal trailer went viral.

But just because it can be done, should it be done?

I think it's a question that's too often ignored in the tech space. There are lots of cool ideas that can be successful but would have a negative impact on society at large.

We all know the value of human connections. They can even impact our life expectancy. Social products therefore can provide significant value when done well.

Originally, Facebook and other early social networks provided a new means to form and maintain connections. You no longer had to be physically near someone to get some of the value of having them in your life. Those positive qualities have waned, but they are still there if you can look past all the bad stuff. No one wants to return to writing letters.

An AI-driven social experience though?

I'll look at the positives first. It could provide some level of social connection that gives people some percentage of the value they get from real relationships. That's better than zero connections.

But the negatives...

Would these AI experiences eventually replace real connections for a lot of people? Online social networks have in some ways done that already, and it has had detrimental effects. Young people spend less time physically together and more time maintaining a well-manicured online persona. A study of Facebook usage as it rolled out in the 00s showed it led to 2% of students becoming clinically depressed. The decline in each student's mental health was roughly 22% as large as the decline associated with losing a job.

Can we say an AI-driven experience would be better?

Let's not forget how LLMs work. They simply regurgitate the content they were trained on. No validation or creativity is going on here. If a situation is unique enough, an LLM can't think on its feet and find an appropriate response.

How would an LLM respond to someone who's depressed or suicidal? It may say all the right things, but if it is someone's only connection, it'll be much easier for them to get trapped in a negative spiral of thinking they're alone in the battles they face. There's no opportunity to relate to another human being and feel less alone.

So should it be done?

I think it depends on your stance on the moral responsibilities each person has. If we think Facebook and Instagram have hurt society, what do we think an AI black box of unlimited fake friends will do? And if we think a product is likely to have a negative effect on society, should we not be obliged to find a solution or move on from the idea?

I know, I know, that's not the world we live in.

But if it were up to me, I'd try to find a solution to the contacts sharing "problem" instead.